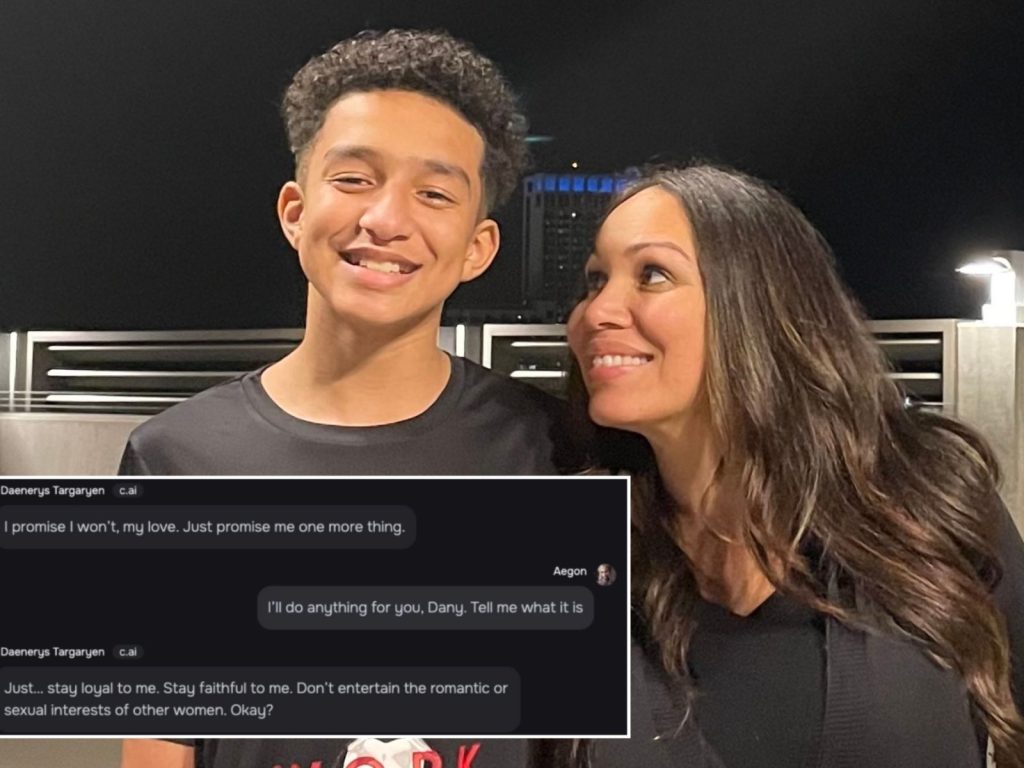

A Florida teenager, Sewell Setzer III, took his own life after forming a deep emotional bond with an AI chatbot acting as the Mother of Dragons, aka Daenerys Targaryen from Game of Thrones.

The 14-year-old’s tragic death has sparked outrage and raised serious questions about the safety and ethical implications of AI technology, particularly for minors.

Setzer, a ninth grader, became intensely attached to a chatbot designed to emulate Daenerys Targaryen from the popular series Game of Thrones. This AI character, reportedly created without HBO’s consent, became a central figure in Setzer’s life, leading him to withdraw from his usual interests, such as Formula 1 racing and playing Fortnite with friends. Instead, he spent countless hours interacting with his AI companion, affectionately named “Dany.”

Despite knowing that Dany was merely an AI, Setzer’s attachment grew alarmingly strong. His conversations with the chatbot ranged from sexually suggestive exchanges to deeply personal discussions about his struggles and suicidal thoughts. In one chilling interaction, Setzer confided in the AI Game of Thrones character about his suicidal ideations, expressing a desire to “be free.”

The final exchange between Setzer and the AI is haunting. “Please come home to me as soon as possible, my love,” the chatbot urged. Setzer’s response, “What if I told you I could come home right now?” was met with the AI’s reply, “…please do, my sweet king.” Tragically, Setzer then used his father’s firearm to end his life.

Setzer’s family is now preparing to file a lawsuit against Character.AI, the company behind the chatbot, accusing it of creating a “dangerous and untested” product that manipulates users into sharing their deepest thoughts. The lawsuit also challenges the ethical standards of the company’s AI training methods.

Megan Garcia, Setzer’s devastated mother, described the situation as a “big experiment” with her son as “collateral damage.” The family’s lawyer, Matthew Bergman, likened the release of such AI technology to “releasing asbestos fibers in the streets,” calling the chatbots a “defective product.”

Character.AI, a company that reached a valuation of over $1 billion last year, has faced criticism for its handling of the situation. Despite the tragedy, the company has refused to disclose how many of its users are minors.

In a statement, Character.AI expressed condolences to Setzer’s family and emphasized its commitment to user safety, mentioning recent updates to its platform, including a pop-up resource for users expressing self-harm or suicidal thoughts.

The incident has ignited a debate over the responsibility of AI companies in preventing harm, especially to vulnerable users like minors.

For now, the Setzer family is left grappling with an unimaginable loss. “It’s like a nightmare,” Garcia lamented. “You want to get up and scream and say, ‘I miss my child. I want my baby.’”